Speech Recognition Accuracy: Metrics & Best Practices

Speech recognition accuracy is crucial in healthcare. Here's what you need to know:

- Word Error Rate (WER) is the main metric for measuring accuracy

- Medical term accuracy, speed, and noise handling are also important

- AI and machine learning are improving accuracy and efficiency

Key ways to boost accuracy:

- Use high-quality audio input

- Customize language models for medical terms

- Train on diverse speech data

- Implement quality control measures

- Continuously improve based on feedback

AI medical scribes are game-changers, offering:

- Fewer errors and more detailed notes

- Real-time data entry

- Integration with EHR systems

| Aspect | Current State | Future Trend |
| --- | --- | --- |
| Accuracy | 85-99% with human review | Improving with AI advancements |
| Speed | Real-time transcription | Faster processing |
| Medical terms | Challenging | Better handling with specialized models |
| Privacy | Concerns with voice data | Stronger safeguards being developed |

The medical speech recognition market is growing rapidly, with a projected 15% CAGR through 2030. As technology improves, expect better handling of complex terms, accents, and background noise, along with stronger data privacy measures.

Speech Recognition in Medical Settings

Speech recognition in healthcare has improved, but it's not perfect. Let's look at the problems and how they affect patient care.

Common Issues in Medical Speech Recognition

Medical speech recognition faces some tough challenges:

- Medical terms are complex

- Accents can confuse the system

- Hospitals are noisy

A survey found that 73% of people said accuracy was the biggest problem. And 66% said accents or dialects caused issues.

Effects on Patient Care and Records

Speech recognition in healthcare has pros and cons:

Good stuff:

- Reports are done faster

- It's cheaper

- Doctors can do more

Not-so-good stuff:

- Important info might be wrong

- Someone needs to check everything

| What Changed | How Much It Changed |
| --- | --- |
| Time to finish reports | 81% faster |
| Reports ready in 1 hour | Went from 26% to 58% |
| Average time for surgery reports | From 4 days to 3 days |
| Reports done in 1 day | Went from 22% to 36% |

These changes mean faster diagnoses and treatment. But mistakes can be dangerous. Get one number wrong in a medicine dose, and it could kill someone.

To be fast AND accurate, many hospitals use both AI and humans. AI writes the reports, then real people check them. This catches mistakes but still saves time.

"AI mistakes in healthcare can be really bad. We need a system doctors can trust."

As this tech gets better, we need to fix these problems. By making it more accurate and having people double-check, we can use speech recognition to help patients and make doctors' jobs easier.

Main Metrics for Speech Recognition Accuracy

Speech recognition accuracy is crucial in medical settings. Here's how we measure it:

Word Error Rate (WER)

WER is the top metric for speech recognition accuracy. It's simple:

WER = (Substitutions + Insertions + Deletions) / Total Words Spoken

Example: A doctor says 29 words and the system makes 11 errors. WER = 11/29, or about 38%.

Lower WER = better accuracy. But it's not perfect - it treats all errors equally.

Levenshtein Distance

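Levenshtein distance counts the minimum number of insertions, deletions, and substitutions needed to turn one sequence into another. Run it on characters and it compares two words. Run it on whole words and you get the math behind WER: the edit count between what was said and what was transcribed.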
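Here's a minimal sketch of how WER falls out of a word-level Levenshtein distance. The function names and the sample sentences are made up for illustration, and lowercasing plus whitespace splitting is an assumed (very basic) way to tokenize. Real evaluation tools normalize text more carefully.

```python
def edit_distance(ref, hyp):
    """Minimum substitutions, insertions and deletions to turn ref into hyp."""
    # prev[j] is the distance between the ref prefix seen so far and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(
                prev[j] + 1,         # deletion: ref item missing from hyp
                curr[j - 1] + 1,     # insertion: extra item in hyp
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1]


def word_error_rate(reference, hypothesis):
    """WER = word-level edit distance / number of reference words."""
    ref_words = reference.lower().split()
    hyp_words = hypothesis.lower().split()
    if not ref_words:
        raise ValueError("reference must contain at least one word")
    return edit_distance(ref_words, hyp_words) / len(ref_words)


# Hypothetical example: "the" is dropped and "today" is added.
reference = "patient reports chest pain radiating to the left arm"
hypothesis = "patient reports chest pain radiating to left arm today"
print(f"WER: {word_error_rate(reference, hypothesis):.1%}")
```

The same `edit_distance` works on plain strings too, which gives the character-level distance between two words.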

Medical Term Accuracy

In healthcare, nailing medical terms is key. Some systems use Jaro-Winkler distance to check how close transcribed medical terms are to the correct ones.
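To make that concrete, here's a rough sketch of Jaro-Winkler similarity written from the textbook definition, plus a helper that picks the closest term from a vocabulary. The helper name `closest_term`, the three-drug vocabulary, and the misspelled input are all hypothetical. How any particular product applies the metric is not something this sketch claims to show.

```python
def jaro(s1, s2):
    """Jaro similarity in [0, 1]; 1.0 means identical strings."""
    if s1 == s2:
        return 1.0
    len1, len2 = len(s1), len(s2)
    if len1 == 0 or len2 == 0:
        return 0.0
    # Characters only count as matching if they sit within this window.
    window = max(max(len1, len2) // 2 - 1, 0)
    matched1 = [False] * len1
    matched2 = [False] * len2
    matches = 0
    for i, ch in enumerate(s1):
        for j in range(max(0, i - window), min(len2, i + window + 1)):
            if not matched2[j] and s2[j] == ch:
                matched1[i] = matched2[j] = True
                matches += 1
                break
    if matches == 0:
        return 0.0
    # Matched characters that appear in a different order; two make one transposition.
    k = 0
    out_of_order = 0
    for i in range(len1):
        if matched1[i]:
            while not matched2[k]:
                k += 1
            if s1[i] != s2[k]:
                out_of_order += 1
            k += 1
    transpositions = out_of_order / 2
    return (matches / len1 + matches / len2
            + (matches - transpositions) / matches) / 3


def jaro_winkler(s1, s2, prefix_scale=0.1):
    """Jaro similarity boosted for a shared prefix of up to 4 characters."""
    score = jaro(s1, s2)
    prefix = 0
    for a, b in zip(s1[:4], s2[:4]):
        if a != b:
            break
        prefix += 1
    return score + prefix * prefix_scale * (1 - score)


def closest_term(word, vocabulary):
    """Return the vocabulary entry most similar to word."""
    return max(vocabulary, key=lambda term: jaro_winkler(word, term))


# Hypothetical vocabulary and transcription error.
drugs = ["metoprolol", "metformin", "methotrexate"]
print(closest_term("metoprolal", drugs))
```

Jaro-Winkler rewards shared prefixes, which suits drug names that differ mostly in their endings. It's a spelling-similarity score only, so a close match still needs a human or a clinical check.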

Speed and Response Time

Fast transcription matters in busy hospitals. Real-time or near-real-time performance is crucial, especially for live transcription during surgeries.

| Aspect | Why It Matters |
| --- | --- |
| Processing Speed | Affects real-world use |
| Latency | Impacts user experience |
| Optimization | Needed for quick, accurate results |

Performance in Noisy Environments

Hospitals are noisy. Good systems need to work well with background sounds.

| Place | Noise Challenge |
| --- | --- |
| ER | Alarms, equipment |
| OR | Multiple voices, machines |
| Patient Rooms | TV, visitors |

In 2017, Google's voice recognition hit 4.7% WER. Humans? About 4%. Some commercial software? 12%.

"ASR transcription accuracy rates don't match human transcriptionists, leading to big errors in critical fields like healthcare."

This gap shows why we need to keep improving these systems, especially for medical use.

Other Accuracy Metrics for Medical Transcription

Medical transcription accuracy goes beyond basic metrics. Here are some key factors:

Phrase-Level Accuracy

In medicine, getting entire phrases right is crucial. It's not just about individual words, but how they form diagnoses, treatment plans, and instructions.

Think about it: "take two pills daily" vs "take too many pills daily". One small mistake could be dangerous.

Multi-Speaker Recognition

Hospitals are busy places. Lots of people talking during consultations or procedures. Good transcription needs to tell these voices apart.

Philips SpeechLive does this well:

- Handles up to 10 speakers in one recording

- Uses AI to figure out who's talking and when

- Labels speakers as Speaker 1, Speaker 2, etc.

This is great for things like patient interviews and team meetings.

Handling Different Accents

Doctors come from all over. So accent recognition matters.

A survey found these U.S. accents trip up AI the most:

- Southern

- New York City

- New Jersey

- Texan

- Boston

To fix this, companies need to train their systems on all sorts of voices:

- Different accents

- Various dialects

- Both men and women

- Young and old speakers

- Different speaking styles

Medical Vocabulary Coverage

Medical lingo is tough. Speech recognition needs to know a TON of specialized terms.

| What | Why It Matters |
| --- | --- |
| Specialized Terms | For accurate diagnoses and treatments |
| Abbreviations | Doctors use these a lot |
| Drug Names | Getting these wrong could be dangerous |
| Anatomical Terms | For describing patient conditions accurately |

Here's something cool: There's a new trick called Accent Pre-Training (Acc-PT). It uses some fancy tech to help AI understand accents better. With just 105 minutes of speech, it improved accent recognition by 33%!

"When speech recognition can't understand accents, it's frustrating. It leaves out people who don't talk like the AI was trained to expect."

This shows we need to keep working on making medical transcription better for everyone, no matter how they speak.

Tips to Improve Speech Recognition Accuracy

Want better speech recognition for medical transcription? Here's how:

Clean Your Data

Garbage in, garbage out. Start with clean audio:

- Cut the noise and echo

- Make sure you can hear the speaker clearly

- Trim dead air at the start and end

- Use WAV files, not MP3s
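Trimming dead air is the easiest of these steps to sketch. The snippet below assumes the audio is already decoded into a list of 16-bit PCM sample values, and the threshold of 500 is an arbitrary illustrative number, not a recommended setting. Production pipelines usually rely on energy windows or a voice activity detector instead.

```python
def trim_silence(samples, threshold=500):
    """Drop leading and trailing samples quieter than threshold (absolute value)."""
    start = 0
    while start < len(samples) and abs(samples[start]) < threshold:
        start += 1
    end = len(samples)
    while end > start and abs(samples[end - 1]) < threshold:
        end -= 1
    # Quiet samples between loud ones are kept: pauses inside speech matter.
    return samples[start:end]


# Hypothetical sample values: quiet edges around a short burst of speech.
audio = [0, 3, -2, 900, -1200, 40, 1500, 5, 0]
print(trim_silence(audio))  # [900, -1200, 40, 1500]
```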

Pick the Right Model

Choose models built for medical talk. Train them on:

- Tons of medical terms

- Different accents and speaking styles

- Various medical specialties

Tailor to Medical Fields

One size doesn't fit all. Customize for different areas:

| Field | What to Focus On |
| --- | --- |
| Radiology | Image terms |
| Oncology | Cancer lingo |
| Pediatrics | Kid health stuff |
| Cardiology | Heart talk |

Keep Learning

Your model should never stop improving:

- Retrain with fresh data often

- Listen to what healthcare staff say

- Stay on top of new medical terms

Play Nice with Other Systems

Make sure your tech fits in:

- Match EHR data formats

- Follow HIPAA rules

- Build APIs for easy data sharing

Testing Speech Recognition for Medical Use

Testing speech recognition for healthcare isn't easy. It requires a smart approach to ensure the tech performs in real medical environments.

Selecting Test Data

Choose test data that mirrors actual medical conversations:

- Use real patient-doctor talks

- Include medical lingo

- Cover various accents and speech patterns

A study of 100 therapy sessions (2013-2016) showed why this matters. It used genuine conversations between 100 patients and 78 therapists to test the system.

Using Weighted Averages

In medical transcripts, some mistakes are worse than others. That's where weighted averages come in.

Here's the breakdown:

| Error Type | Weight |
| --- | --- |
| Patient name | 5 |
| Medication dose | 4 |
| Diagnosis | 3 |
| General words | 1 |

This method zeroes in on the most critical parts of the transcript.
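One way to turn those weights into a single score is sketched below. The weights come from the table above. Everything else is an assumption: the category keys, the idea of dividing weighted errors by weighted word counts, and the sample counts are all illustrative, since the article doesn't spell out an exact formula.

```python
# Weights from the table above; the dictionary keys are made-up labels.
ERROR_WEIGHTS = {
    "patient_name": 5,
    "medication_dose": 4,
    "diagnosis": 3,
    "general": 1,
}


def weighted_error_score(error_counts, word_counts, weights=ERROR_WEIGHTS):
    """Weighted errors divided by weighted words, so critical categories count more."""
    weighted_errors = sum(weights[cat] * n for cat, n in error_counts.items())
    weighted_total = sum(weights[cat] * n for cat, n in word_counts.items())
    if weighted_total == 0:
        raise ValueError("word_counts must contain at least one word")
    return weighted_errors / weighted_total


# Hypothetical transcript: 200 words, 5 errors, one of them in a dose.
word_counts = {"patient_name": 2, "medication_dose": 4, "diagnosis": 6, "general": 188}
error_counts = {"medication_dose": 1, "general": 4}

print(f"Weighted error score: {weighted_error_score(error_counts, word_counts):.1%}")
```

In this made-up case the plain error rate is 2.5% (5 of 200 words), while the weighted score comes out around 3.4% because the dose error counts four times as much as a general word.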

Testing in Noisy Conditions

Hospitals are noisy. Your tests should be too.

"ASR accuracy can swing wildly, with word error rates from 5% to 30%", says a recent ASR systems study.

To test right:

- Add background noise to test audio

- Use real medical setting recordings

- Test with varying noise levels
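Adding background noise at controlled levels can be sketched with a signal-to-noise ratio (SNR) target. This example uses a synthetic tone and random noise as stand-ins for real recordings, and the SNR values of 20, 10, and 0 dB are just illustrative test points. The function names are hypothetical.

```python
import math
import random


def rms(samples):
    """Root-mean-square amplitude of a non-empty sample sequence."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def mix_at_snr(speech, noise, snr_db):
    """Add noise to speech, scaled so the speech-to-noise ratio is snr_db decibels."""
    if not speech or len(noise) < len(speech):
        raise ValueError("noise must be at least as long as non-empty speech")
    noise = noise[:len(speech)]
    noise_rms = rms(noise)
    if noise_rms == 0:
        raise ValueError("noise is silent")
    # SNR(dB) = 20 * log10(rms_speech / rms_noise), solved for the noise level.
    target_noise_rms = rms(speech) / (10 ** (snr_db / 20))
    gain = target_noise_rms / noise_rms
    return [s + gain * n for s, n in zip(speech, noise)]


# Synthetic stand-ins: a 440 Hz tone for "speech", Gaussian noise for the ward.
rng = random.Random(0)
speech = [math.sin(2 * math.pi * 440 * t / 16000) for t in range(16000)]
noise = [rng.gauss(0, 1) for _ in range(16000)]

for snr_db in (20, 10, 0):
    noisy = mix_at_snr(speech, noise, snr_db)
    # Feed `noisy` to the recognizer here and record WER for this noise level.
    print(snr_db, "dB SNR ->", len(noisy), "samples")
```

Lower SNR means louder noise relative to the speaker. Plotting WER against SNR shows how quickly a system degrades as the room gets louder.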

Take the SickKids hospital study. They tested AI in actual ER conditions. The result? AI could speed up care for 22.3% of visits, slashing wait times by nearly 3 hours.


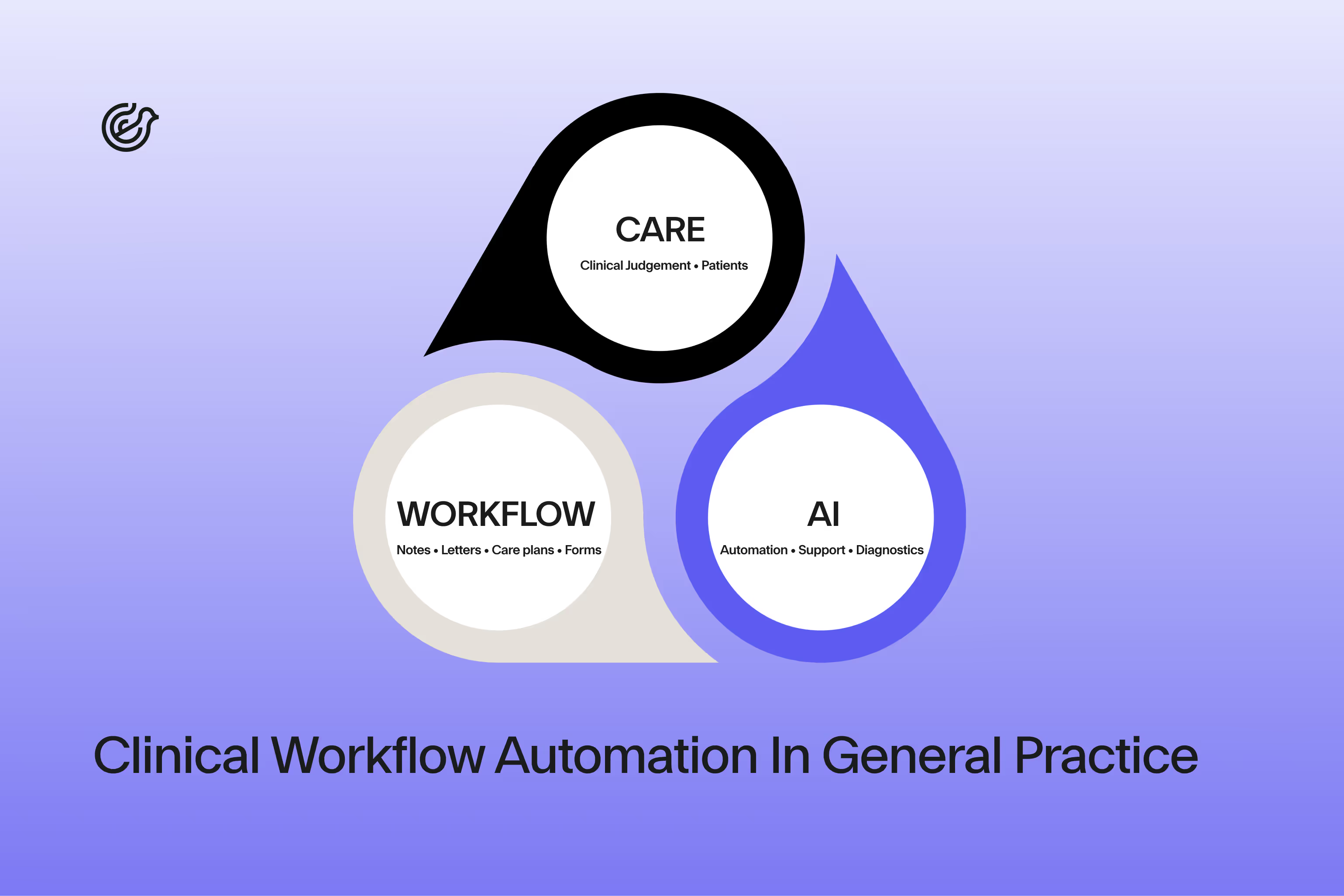

Case Study: Lyrebird Health's Accuracy Approach

Lyrebird Health is shaking up medical transcription with its AI-powered scribe. Here's how they're nailing speech recognition accuracy in healthcare:

AI Scribe Features

Lyrebird's AI Scribe:

- Spits out full notes in 10 seconds flat

- Transcribes entire consults in the background

- Auto-creates detailed notes, referrals, and patient letters

Plus, it plays nice with Best Practice, a top patient management system. Doctors can grab consult notes right in their usual workflow.

Accuracy Wins

Lyrebird Health's accuracy game is strong:

| Benefit | Impact |
| --- | --- |
| Time savings | Less paperwork |
| Patient engagement | Better rapport during consults |
| Documentation quality | More thorough, accurate notes |
| Operational efficiency | Lower costs for practices |

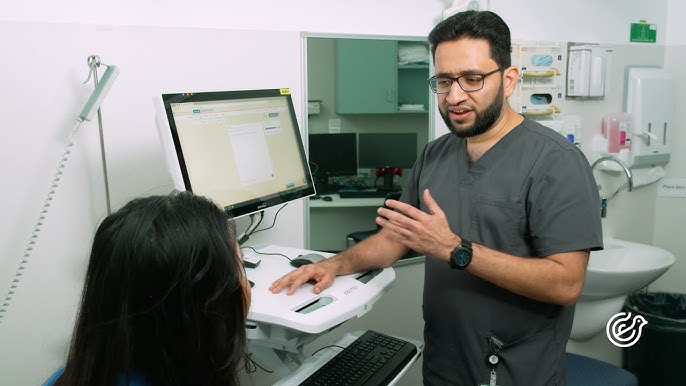

Dr. Ryan Vo, GP and co-CEO of Nuvo Health, says:

"The notes now are very comprehensive. [Doctors] feel much more secure knowing that the critical things in the consult have been recorded and documented."

That's a big deal for capturing key medical info accurately.

Made for Medical Staff

Lyrebird Health tailored their solution for healthcare pros:

1. Personalized doc styles

The AI matches each doctor's writing style.

2. Medical lingo

It knows complex medical terms and abbreviations.

3. Fits right in

Slides into existing patient management systems without a hitch.

Kai Van Lieshout, co-founder and CEO of Lyrebird Health, puts it this way:

"The vision for Lyrebird Health is to empower healthcare practitioners by streamlining administrative tasks, enabling them to dedicate more time to patient care."

Current Issues in Medical Speech Recognition

Medical speech recognition faces unique challenges. Here's what's holding it back:

Handling Medical Terms and Abbreviations

Medical language is a tough nut to crack for AI. Why?

- It's packed with specialized terms

- Abbreviations change meaning based on context

A survey found 73% of users say accuracy is the biggest hurdle. Jargon and field-specific terms are the main culprits.

Managing Conversation Interruptions

Doctor-patient talks are messy. They're full of pauses, casual speech, and background noise. These trip up AI transcription big time.

Ensuring Privacy and Security

Healthcare data is sensitive stuff. Speech recognition systems need to:

- Follow strict privacy rules

- Keep patient info safe

- Handle voice data with care

Voice is biometric data, raising big questions about consent and protection.

| Challenge | Impact | Solution |
| --- | --- | --- |
| Medical Terms | High errors | Medical datasets |
| Interruptions | Missed text | Better noise filtering |
| Privacy | Limited data | Synthetic data |

But there's progress:

outperformed other APIs in handling medical terms, with a 21.9% Medical Term Error Rate for English.

Nabla combined general and medical speech recognition strengths. Their tests showed off-the-shelf solutions often fall short in healthcare.

The path forward? AI needs to get smarter about what matters in medical speech. As one expert put it:

"Voice recognition is making waves in healthcare, but it can't pick up on a physician's intent."

To improve, we need:

- Better training data with diverse accents and medical terms

- Improved noise-handling

- Stricter privacy safeguards

What's Next for Medical Speech Recognition

Medical speech recognition is changing healthcare documentation. Here's what's coming:

New AI and Machine Learning Tools

AI and machine learning are pushing speech recognition forward:

- Deepgram's Nova 2 Medical Model shows a 16% boost in Word Recall Rates and an 11% drop in Word Error Rate compared to general systems.

- Nova 2 can transcribe 5 to 40 times faster than other options, allowing instant documentation during patient visits.

- AI models trained on large medical datasets can better handle complex terms and acronyms.

Combining with Other Medical Tech

Speech recognition is joining forces with other healthcare tools:

| Integration | Benefit |
| --- | --- |
| EHR systems | Automatic entry of patient data |
| Telemedicine | Transcription of remote consultations |
| Radiology | Faster, more accurate reporting |

"Voice recognition is making waves in healthcare, but it can't pick up on a physician's intent." - Healthcare AI Expert

To fix this, future systems might:

- Use context to understand medical intent

- Integrate with decision support tools

- Offer real-time suggestions during patient care

The medical speech recognition market is growing fast, with a projected 15% CAGR through 2030. Why? Because:

- Doctors want efficient documentation

- AI is becoming more common in healthcare

- Hospitals need cost-effective solutions

As the tech gets better, we'll see:

- More accurate transcription of complex medical terms

- Better handling of accents and background noise

- Stronger privacy safeguards for voice data

These improvements will help with current issues like managing conversation interruptions and keeping data secure.

Conclusion

Speech recognition accuracy in medical settings is crucial. Here's what we've learned:

Key Metrics for Accuracy

- Word Error Rate (WER)

- Medical Term Accuracy

- Speed and Response Time

Best Practices for Improvement

1. High-Quality Audio Input

Use noise-canceling mics, keep it quiet, and record at 16,000 Hz or higher.

2. Advanced Language Models

Customize for medical lingo and update regularly.

3. Diverse Training Data

Include various accents and speech patterns.

4. Quality Control Measures

| Measure | Impact |
| --- | --- |
| Multi-level review process | 30% fewer errors in medical docs |
| Style guides | Used by 85% of transcription companies |
| Human oversight | 15% boost in legal transcription accuracy |

5. Continuous Improvement

Let users fix mistakes and analyze feedback.

AI Medical Scribes: Game Changers

They're shaking things up:

- Fewer errors, more details

- Real-time data entry

- Play nice with EHR systems

"Voice recognition is making waves in healthcare, but it can't pick up on a physician's intent." - Healthcare AI Expert

What's next? Systems that get medical intent and work with decision support tools.

Looking ahead, expect better handling of complex terms, accents, and background noise. Plus, stronger privacy for voice data. These upgrades will tackle current issues and boost healthcare documentation efficiency.

FAQs

How do you measure speech recognition accuracy?

Speech recognition accuracy is mainly measured using Word Error Rate (WER). It's pretty simple:

WER = (S + D + I) / N * 100%

Where:

- S: substitutions

- D: deletions

- I: insertions

- N: total words in the reference

Lower WER? Better accuracy. A 5% WER means roughly 95% of words were spot on. It's only rough because inserted words count as errors too, so WER can even top 100%.

What other metrics measure speech recognition accuracy?

WER is the go-to, but other metrics can paint a fuller picture:

- Medical Term Accuracy: How well does it handle doctor-speak?

- Speed: How fast does it spit out results?

- Noisy Performance: Can it handle background chaos?

Mix and match metrics based on what you need.

What's the main accuracy metric in ASR?

It's all about WER. It breaks down errors into three types:

| Error | What It Means | Example |
| --- | --- | --- |
| Substitution | Wrong word | "heart" for "hurt" |
| Deletion | Missing word | Skipping "the" |
| Insertion | Extra word | Adding "a" where it's not needed |
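To see the three error types counted separately, here's a sketch that aligns a reference and a transcript with a word-level edit distance table and then walks back through it. The function name and the sample sentences are invented for illustration. When several alignments tie, the split between types can differ from tool to tool, so treat the breakdown as one reasonable choice.

```python
def count_errors(ref_words, hyp_words):
    """Count substitutions, deletions and insertions along one minimal alignment."""
    n, m = len(ref_words), len(hyp_words)
    # dist[i][j] = edit distance between ref_words[:i] and hyp_words[:j].
    dist = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(n + 1):
        dist[i][0] = i
    for j in range(m + 1):
        dist[0][j] = j
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = 0 if ref_words[i - 1] == hyp_words[j - 1] else 1
            dist[i][j] = min(dist[i - 1][j - 1] + cost,
                             dist[i - 1][j] + 1,
                             dist[i][j - 1] + 1)

    counts = {"substitution": 0, "deletion": 0, "insertion": 0}
    i, j = n, m
    # Walk back from the bottom-right corner, labelling each step.
    while i > 0 or j > 0:
        same = i > 0 and j > 0 and ref_words[i - 1] == hyp_words[j - 1]
        if same and dist[i][j] == dist[i - 1][j - 1]:
            i, j = i - 1, j - 1              # correct word
        elif i > 0 and j > 0 and dist[i][j] == dist[i - 1][j - 1] + 1:
            counts["substitution"] += 1
            i, j = i - 1, j - 1
        elif i > 0 and dist[i][j] == dist[i - 1][j] + 1:
            counts["deletion"] += 1          # reference word missing
            i -= 1
        else:
            counts["insertion"] += 1         # extra word in the transcript
            j -= 1
    return counts


# Hypothetical sentences mirroring the table: "the" skipped,
# "heart" written for "hurt", and an extra "a" added.
reference = "the knee hurt after surgery".split()
transcript = "knee heart after a surgery".split()
errors = count_errors(reference, transcript)
print(errors)
print("WER:", sum(errors.values()) / len(reference))
```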

How do you check transcription accuracy?

Here's the drill:

- Pick some audio samples

- Get humans to transcribe them (the gold standard)

- Compare ASR output to human version using WER

- Throw in extra checks if needed (like medical term accuracy)
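One practical detail in the comparison step: human transcripts usually carry capitalization and punctuation that ASR output may not, and those differences shouldn't count as word errors. Below is a small, assumed normalization pass to run on both sides before scoring. The rules (lowercase, drop punctuation except apostrophes) are a simple illustrative choice, and real projects often add handling for numbers and abbreviations.

```python
import re


def normalize(text):
    """Lowercase, replace punctuation (except apostrophes) with spaces, split into words."""
    text = text.lower()
    text = re.sub(r"[^\w\s']", " ", text)
    return text.split()


# Hypothetical pair: same words, different formatting.
human = "Patient denies chest pain, fever."
asr = "patient denies chest pain fever"
print(normalize(human) == normalize(asr))  # True
```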

"The less fixing needed after AI does its thing, the faster and cheaper it gets." - Happy Scribe

Happy Scribe claims 85% accuracy in 5 minutes with AI alone. Add human touch-ups? It jumps to 94-99%.